CONTRIBUTIONS:

Fabrication, Visual Concept Development, Web Development (p5.js/ml5.js)

TOOLS:

Arduino Nano 33 IoT, Cardboard, Paper, Servo Motors, p5.js, Body-tracking Camera (ml5.js)

DURATION:

3 weeks (November 2024)

TEAM:

Olivia Pasian, Paul Van Rijn, Kasper Zhang

The bird in full.

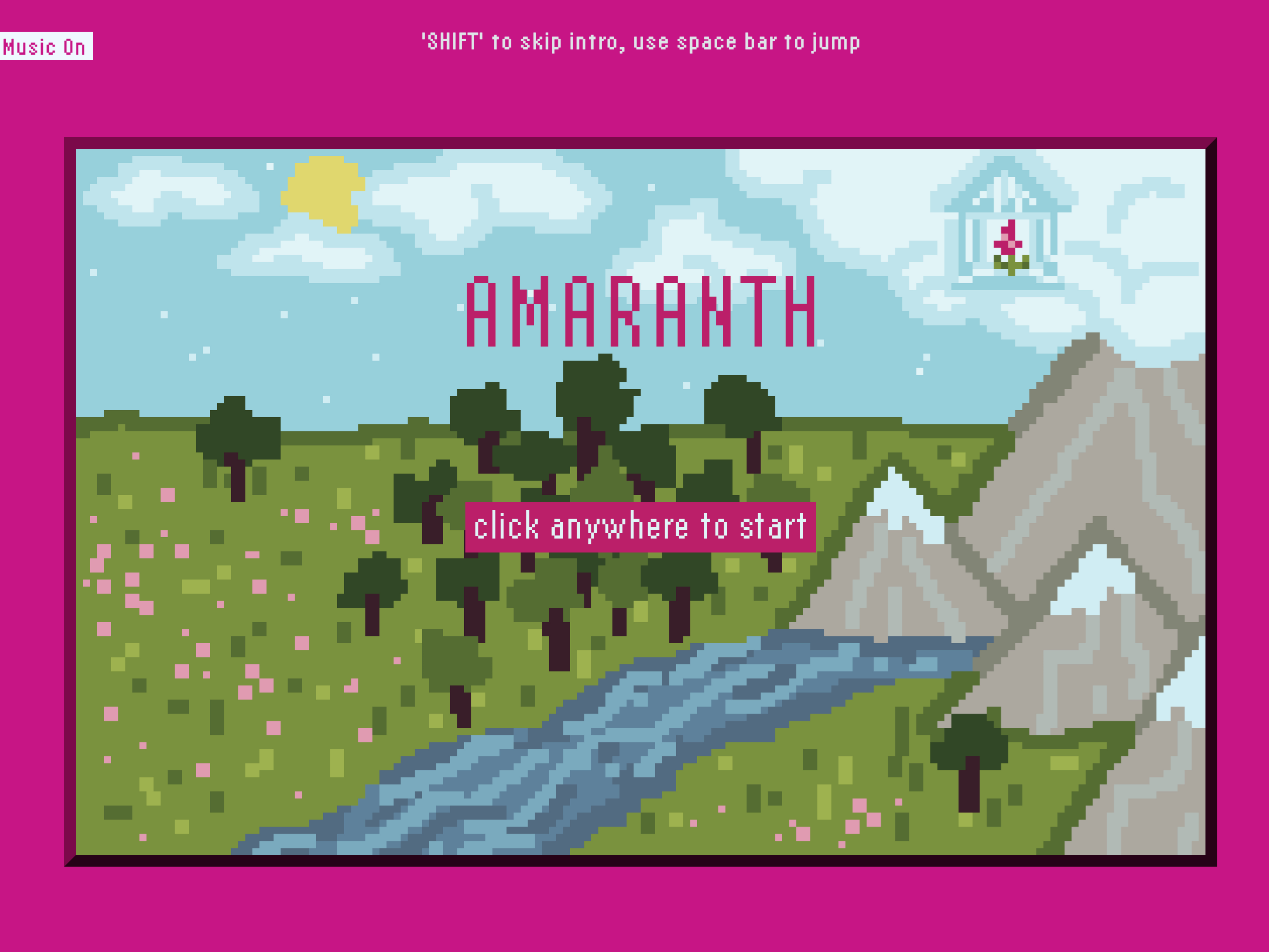

Bird interaction example: the bird was happy to have people around.

Bird interaction example: the bird was uncomfortable with direct staring.

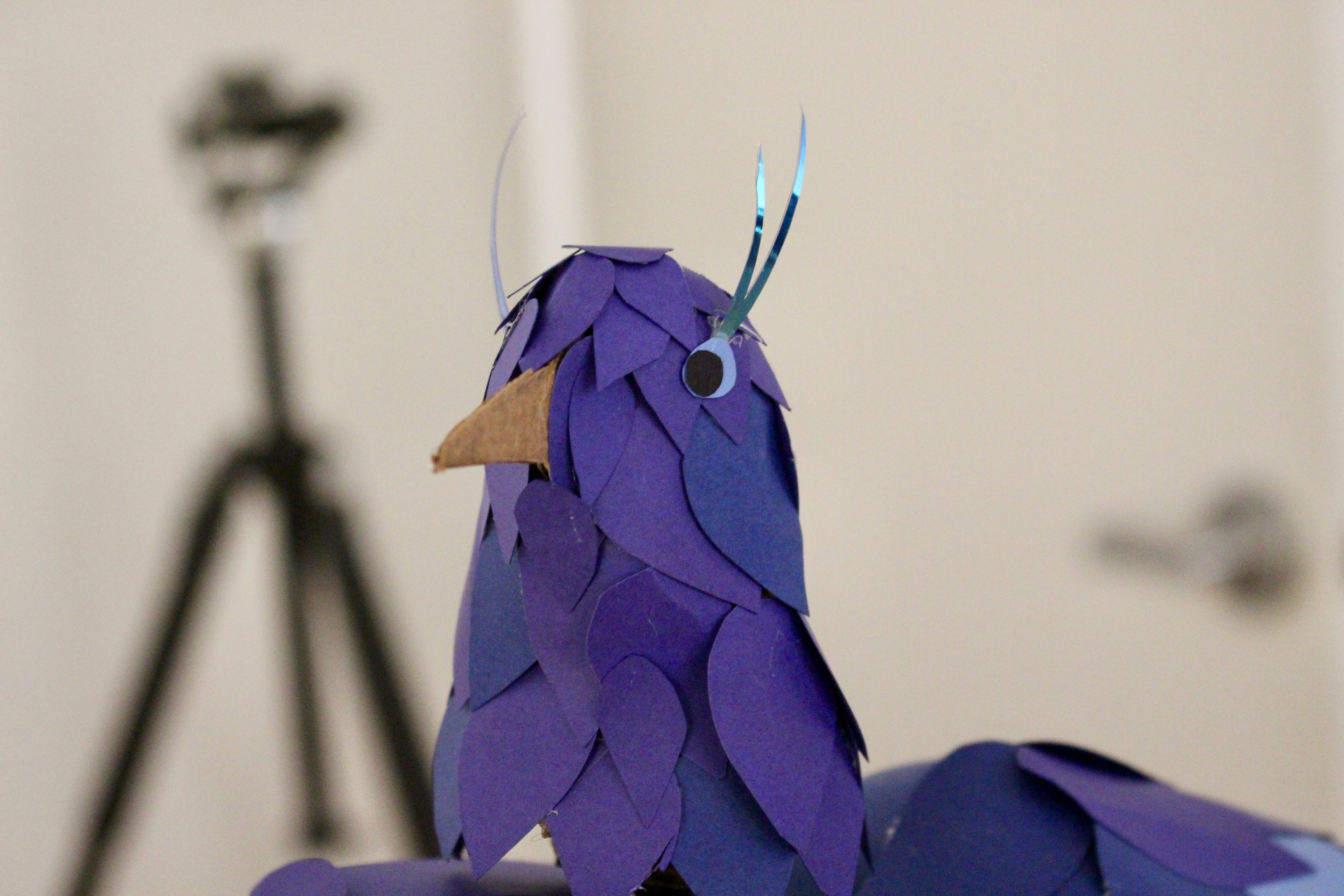

Close-up of bird’s head and feathering details.

Bird interaction example: the bird was calm with only one visitor.

PROJECT SUMMARY:

“Blue Gaze” is an Arduino project I worked on with Paul Van Rijn and Kasper Zhang for a class assignment.

It is an emotional animatronic bird that looks at the people around it and reacts with an animated dance that expresses its feelings.

It’s made of cardboard, paper, servo motors, an Arduino, and code. The bird uses a camera in its chest and body-tracking software to count the number of people in its view and to assess where they are looking. Too many people or prolonged stares make the bird uncomfortable, but it is happy to have a visitor or two.
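The decision rule above says that too many people or a prolonged stare means discomfort, while a visitor or two is welcome. It could be sketched roughly as follows. This is a minimal TypeScript sketch, not the project's code. The names `StareTimer` and `chooseMood` are hypothetical. So are the two-person limit, the three-second stare limit, and treating an empty room as neutral; all are assumptions for illustration.

```typescript
// The three states from the write-up; the string labels themselves are hypothetical.
type Mood = "neutral" | "happy" | "stressed";

// Assumed thresholds, chosen only for illustration.
const MAX_COMFORTABLE_PEOPLE = 2; // "a visitor or two" is fine
const STARE_LIMIT_MS = 3000; // a stare this long counts as "prolonged"

// Tracks how long at least one person has been continuously facing the camera.
class StareTimer {
  private stareStart: number | null = null;

  // Call once per frame with a timestamp in ms and whether anyone is facing the bird.
  // Returns the current stare duration in ms (0 when nobody is facing it).
  update(nowMs: number, someoneFacing: boolean): number {
    if (!someoneFacing) {
      this.stareStart = null; // the stare is broken, so reset
      return 0;
    }
    if (this.stareStart === null) {
      this.stareStart = nowMs; // a new stare begins
    }
    return nowMs - this.stareStart;
  }
}

// Maps the two observations (head count and stare duration) to a mood.
function chooseMood(peopleCount: number, stareMs: number): Mood {
  if (peopleCount > MAX_COMFORTABLE_PEOPLE || stareMs >= STARE_LIMIT_MS) {
    return "stressed"; // crowded, or someone has stared too long
  }
  if (peopleCount >= 1) {
    return "happy"; // one or two visitors
  }
  return "neutral"; // nobody around (assumed)
}
```

For example, `update` could be called each frame with p5.js's `millis()` and a flag saying whether anyone is facing the camera. Under these assumed limits, a stare lasting 3.5 seconds would push even a single visitor into the stressed state.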

The emotional responses are not based on a real bird’s behaviour and are not necessarily anthropomorphic. Instead, the bird has its own personality with three set states. The first is neutral, where the bird calmly looks around and gently flaps its wings. The second is happy, where the bird excitedly bops up and down and shows off its feathers. The third is stressed, where the bird tilts fully downward and anxiously twitches its wings and head.
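The three states map naturally onto three dances. The minimal sketch below assumes four servos in a hypothetical [head, body tilt, left wing, right wing] order, and every angle is made up. It shows each dance as an array of steps and a `switch` that picks the dance for the current mood. `DancePlayer` is a hypothetical helper, not the project's actual code.

```typescript
type Mood = "neutral" | "happy" | "stressed";

// One target angle (degrees) per servo; this servo order is an assumption.
type ServoAngles = [number, number, number, number]; // [head, body tilt, left wing, right wing]

// Each dance is an array of steps. All angle values are illustrative only.
const NEUTRAL_DANCE: ServoAngles[] = [
  [80, 90, 70, 110], // look one way, wings slightly open
  [100, 90, 80, 100], // look the other way, wings ease back
];
const HAPPY_DANCE: ServoAngles[] = [
  [90, 70, 40, 140], // bop up, wings spread to show off feathers
  [90, 110, 60, 120], // bop down
];
const STRESSED_DANCE: ServoAngles[] = [
  [60, 150, 85, 95], // tilted fully down, small twitches of head and wings
  [70, 150, 95, 85],
  [60, 150, 85, 95],
  [75, 150, 95, 85],
];

// Case switching: choose the dance for the current mood.
function danceFor(mood: Mood): ServoAngles[] {
  switch (mood) {
    case "neutral":
      return NEUTRAL_DANCE;
    case "happy":
      return HAPPY_DANCE;
    case "stressed":
      return STRESSED_DANCE;
    default:
      throw new Error("Unknown mood: " + mood);
  }
}

// Steps through the current dance, looping at the end and restarting when the mood changes.
class DancePlayer {
  private mood: Mood = "neutral";
  private step = 0;

  next(mood: Mood): ServoAngles {
    if (mood !== this.mood) {
      this.mood = mood;
      this.step = 0; // start the new dance from its first step
    }
    const dance = danceFor(mood);
    const angles = dance[this.step];
    this.step = (this.step + 1) % dance.length; // cycle back to step 0
    return angles;
  }
}
```

This sketch restarts at step 0 whenever the mood changes, so a new dance never begins mid-sequence. The write-up does not say whether the original project did this.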

PROJECT BRIEF:

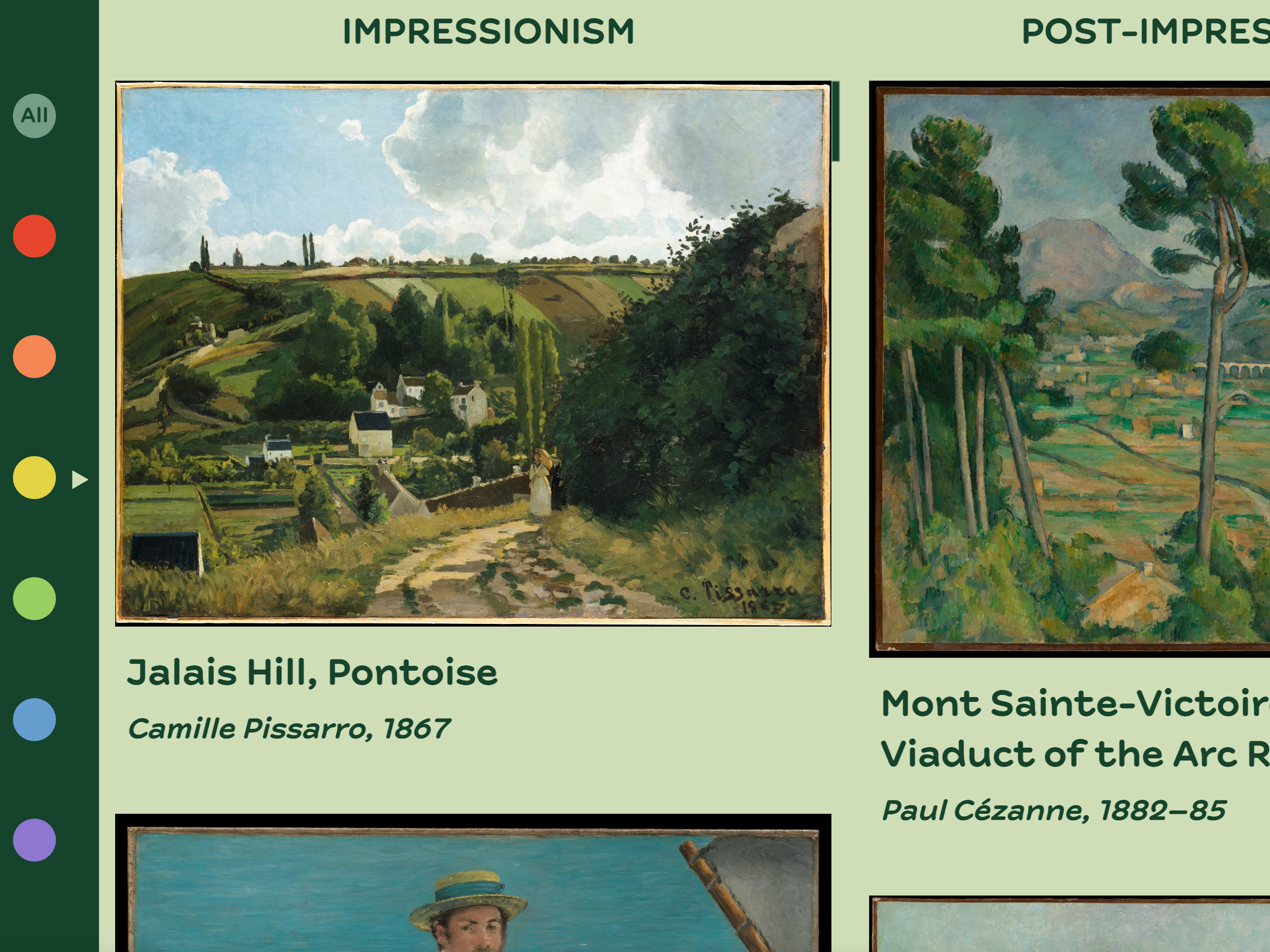

This was a project for a class assignment. The task was to consider how computers “see” and how their interpretation of that visual data could shape the way they control aspects of our physical environments. The assignment was extremely open-ended, only requiring that we use an Arduino, a camera, p5.js with WebSerial, servo motors, and ml5.js for body tracking. The goal was to learn how to computationally observe and analyze changing activity in a physical environment and to trigger physical events in the same environment in response.

CONCEPT:

“Blue Gaze” seeks to represent the power imbalance and discomfort of an objectifying gaze. It encourages visitors to read the bird’s body language to consider the effect of their interactions.

The primary hardware components of the bird are four servo motors connected to an Arduino Nano 33 IoT. The Arduino is mounted on a protoboard and connected to a laptop via USB. The laptop runs p5.js code that sends servo angles to the Arduino, linking the body-tracking data to the servo animations.

The p5.js script uses ml5.js to detect eye and ear keypoints. The distances between these points are used to estimate the number of people, who is facing the camera, and how close visitors are. This data was used to calculate an emotional state, with case switching determining which animated dance to send to the servos. Each dance cycles through an array of steps, each specifying a set of servo angles.
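Below is a minimal sketch of the keypoint analysis and the hand-off to the Arduino. It uses a simplified, hypothetical `Head` type rather than ml5.js's exact output, whose field names differ between ml5.js versions. Several pieces are assumptions for illustration, not the project's documented method:

- the facing test (both ears visible, eyes centred between them)
- the use of eye spacing as a proxy for closeness
- every threshold
- the comma-separated line format sent over serial

```typescript
// Simplified keypoint: image position plus a detection confidence (assumed shape).
interface Point {
  x: number;
  y: number;
  confidence: number;
}

// The eye and ear keypoints for one detected person (hypothetical structure).
interface Head {
  leftEye: Point;
  rightEye: Point;
  leftEar: Point;
  rightEar: Point;
}

// Hypothetical tuning values.
const MIN_CONFIDENCE = 0.3;
const FACING_TOLERANCE = 0.25; // max eye-midpoint offset from the ear midpoint, as a fraction of ear span
const CLOSE_EYE_DISTANCE_PX = 60; // eyes at least this far apart in the image means the person is close

function dist(a: Point, b: Point): number {
  const dx = a.x - b.x;
  const dy = a.y - b.y;
  return Math.sqrt(dx * dx + dy * dy);
}

// Facing the camera: all four points are visible and the eyes sit roughly midway
// between the ears (turning the head shifts the eyes toward one ear).
function isFacing(h: Head): boolean {
  const pts = [h.leftEye, h.rightEye, h.leftEar, h.rightEar];
  if (pts.some((p) => p.confidence < MIN_CONFIDENCE)) return false;
  const earSpan = dist(h.leftEar, h.rightEar);
  if (earSpan === 0) return false;
  const eyeMidX = (h.leftEye.x + h.rightEye.x) / 2;
  const earMidX = (h.leftEar.x + h.rightEar.x) / 2;
  return Math.abs(eyeMidX - earMidX) / earSpan <= FACING_TOLERANCE;
}

// Apparent eye spacing grows as a person moves toward the camera.
function isClose(h: Head): boolean {
  if (h.leftEye.confidence < MIN_CONFIDENCE || h.rightEye.confidence < MIN_CONFIDENCE) return false;
  return dist(h.leftEye, h.rightEye) >= CLOSE_EYE_DISTANCE_PX;
}

interface SceneSummary {
  people: number;
  facing: number;
  close: number;
}

// Reduces all detected heads to the three quantities the mood logic needs.
function summarize(heads: Head[]): SceneSummary {
  return {
    people: heads.length,
    facing: heads.filter(isFacing).length,
    close: heads.filter(isClose).length,
  };
}

// Hypothetical serial format: integer angles clamped to 0-180, comma-separated, newline-terminated.
function toSerialLine(angles: number[]): string {
  return angles.map((a) => Math.round(Math.min(180, Math.max(0, a)))).join(",") + "\n";
}
```

Sending one newline-terminated line per frame would let the Arduino side read until `\n` and split on commas. Any equivalent framing would work just as well.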

ROLE DISTRIBUTION:

My role in this project was handling most of the fabrication and the visual concept, as well as the computer vision code (built on ml5.js for body tracking). Paul handled most of the servo work, Kasper worked on the emotional state calculations, and the rest of the code was a combined effort.

EXHIBITIONS:

Image from the exhibition space at the 2024 DF Open Show.